A Brief History Of Antibiotic Resistance: How A Medical Miracle Turned Into The Biggest Public Health Danger Of Our Time

Antibiotics, also known as antibacterials and antimicrobials, revolutionized medicine during the latter half of the 20th century. But as time goes on, the success of antibiotics may be completely cancelled out by their combative counterparts: resistant bacteria.

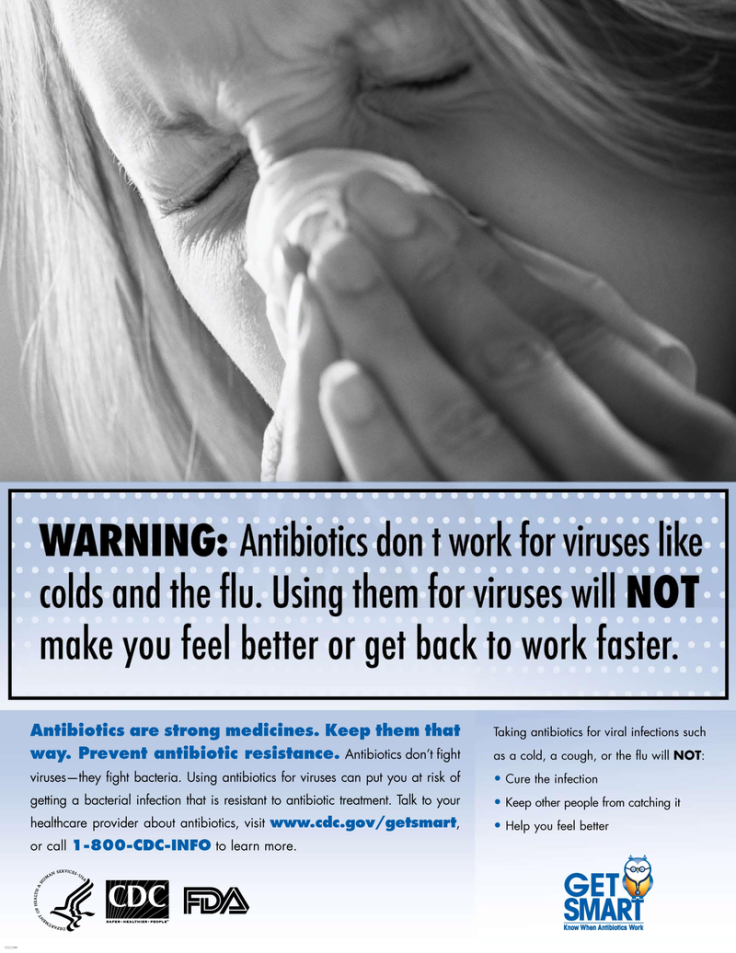

Humans have overused antibiotics, not just by using them to treat all sorts of infections, from pneumonia to strep throat, but also by sometimes taking too much of them or infusing them into agricultural systems, animals, and food products, as the CDC illustrates. This overuse has opened the door for bacteria to evolve into resistant strains that beat out the drugs. We’re now in a place where changes desperately need to be made in order to prevent millions of deaths from antibiotic resistance in the coming decades. These microbes seem to be smarter than us.

The authors of a 2010 report on the evolution of resistance note that microbes have “extraordinary genetic capabilities” that benefit “from man’s overuse of antibiotics to exploit every source of resistance genes... to develop [resistance] for each and every antibiotic introduced into practice clinically, agriculturally, or otherwise.” Here’s how antibiotics went from being a revolutionary medical breakthrough to a massive public health concern.

Which Came First, The Antibiotic Or The Resistance?

The antibiotic era, as it’s called, may not have begun until the 20th century, but antibiotics were already in use in ancient folk medicine. The term “antibiotic” has an extremely broad definition, describing the activity of any compound or chemical that can be applied to kill or inhibit bacteria that cause infectious diseases.

350-550 CE. The earliest traces of antibiotic use date back well over a thousand years. Tetracycline, a common antibiotic still used today, has been found in skeletons from Sudanese Nubia, a region along the Nile south of ancient Egypt. Researchers believe that ancient Nubians were actually brewing tetracycline into their beers or otherwise incorporating it into their diets over a long period of time, because the compound was found embedded deep in their bones and the population’s documented rate of infectious disease seems to have been quite low. This discovery overturned the commonly held belief that antibiotics didn’t exist before 1928.

It’s tough to detect other ancient antibiotics aside from tetracyclines, however, as most didn’t settle into bones and tooth enamel in the same way. Only documentation and anecdotes remain to give us insights into the use of other ancient antibiotics. What is now known to be an antimalarial drug, artemisinin, was used in ancient Chinese medicine, and it’s possible that herbalists also used moldy bread to prevent wounds from getting infected. There’s also historical evidence that, in Jordan, red soils rich in antibiotic-producing bacteria were used to treat skin infections like rashes.

And for as long as antibiotics have existed, bacterial resistance has existed alongside them — but never on such a large scale. “The natural history of antibiotic resistance genes can be revealed through the phylogenetic reconstruction,” the authors of one study write, “and this kind of analysis suggests the long-term presence of genes conferring resistance to several classes of antibiotics in nature well before the antibiotic era.” In short, bacteria have always been doing what they’re good at: finding ways to survive.

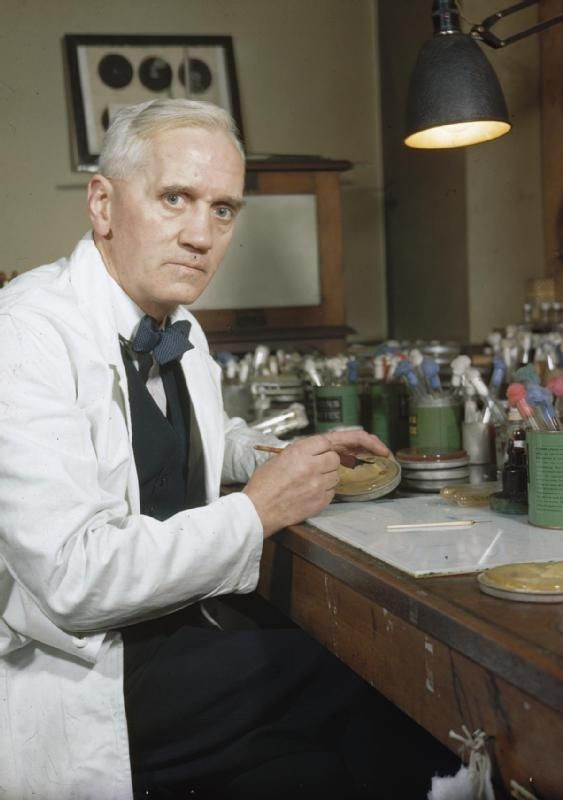

1928. Alexander Fleming, a Scottish biologist, took the fight against infections to a new level when he identified penicillin, making this the year that the modern antibiotic era began.

The discovery had apparently been an accident. Fleming had left an uncovered Petri dish of Staphylococcus bacteria near an open window in his basement lab, and in the morning found that mold growth had inhibited the bacteria. Penicillin, which comes from a type of fungus known as Penicillium rubens, became the first compound to be used officially as an antibiotic.

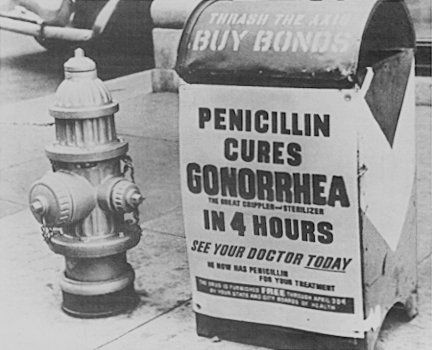

1943. Penicillin was on its way to mass production and was used heavily to treat Allied troops fighting in Europe during World War II. Considered a miracle drug, it was soon available to the public, despite warnings from Alexander Fleming that overuse could lead to mutant bacteria. Because bacteria can transfer genes horizontally (directly from one bacterium to another), their ability to share resistance was adding to a growing threat unheeded by many scientists and doctors of the day.

1948. The transfer of antibiotic use from medicine to agriculture also happened in a rather accidental way. Robert Stokstad, an animal nutritionist, and Thomas Jukes, a biochemist, were working at the company Lederle to develop an “animal protein factor” that could enhance chicken growth and boost poultry profits. The researchers were initially experimenting with vitamin B12, which was believed to boost animal growth, but eventually found that the cellular remains of Streptomyces aureofaciens bacteria, from which tetracyclines are extracted, contained a lot of the vitamin. As the Lederle labs had also discovered the first tetracycline, the researchers had access to plenty of these bacterial remains. Chicks that received supplements including Streptomyces aureofaciens grew 24 percent more than those receiving liver extract, which also contained high levels of B12. The bacterial shells, which still contained traces of the antibiotics, were more effective in making the chicks grow (and much cheaper than liver extract). This discovery jumpstarted the process of routinely injecting antibiotics into animals.

Meanwhile, resistant staph bacteria were growing in hospitals. According to Harvard Magazine, resistant staph infections in hospitals had risen from 14 percent in 1946 to 59 percent in 1948.

1952. By this time, doctors were somewhat aware of the possibility of antibiotic resistance, but overall they remained hopeful and optimistic about the success of these new drugs, which could defeat diseases that had long plagued humanity, like cholera and syphilis. A study on antibiotic resistance published that year concluded that syphilis could be treated “without any indications of an increased incidence of [resistant] infections, and this work gives grounds for hoping that the widespread use of penicillin will equally not result in an increasing incidence of infections resistant to penicillin.”

1950s–1970s. The next couple of decades were considered the golden era of antibiotics, due to the sheer number of new drugs that were being developed: streptomycin, to treat serious infections like endocarditis and the plague; ampicillin, which treats respiratory tract infections and meningitis; and dozens of others. In the excitement surrounding the success of these antibiotics, they were made readily available to the public, as penicillin had been, ultimately paving the way for resistance to develop more easily.

The Tide Turns, The Superbugs Arrive

1955. As Fleming had predicted, resistance to penicillin gradually built up due to the accessibility of the drug. By 1955, many countries had attempted to slow this resistance by limiting penicillin use to prescription only, but it was too little too late: many bacterial strains had already defeated the antibiotic, including staphylococci.

1960. In an attempt to defeat penicillin-resistant strains, scientists developed methicillin, a different antibiotic in the penicillin class that could work against resistant bacteria. But within a year, strains of Staphylococcus aureus (S. aureus) had developed resistance to methicillin too; these eventually became known as MRSA, or methicillin-resistant Staphylococcus aureus. Now, MRSA can resist most antibiotics, and infections are common in hospitals, making it one of the biggest forerunners of multidrug-resistant (MDR) bacteria.

1976. At Tufts University, a physician and researcher named Stuart Levy became one of the first to identify antibiotic resistance caused by the use of antibiotics in animals, and to clamor for greater awareness of the problem. He worked on a study that year that examined how small amounts of antibiotics in animal feed could cause resistance in humans. On a farm in Massachusetts, Levy fed tetracycline to chickens, and found that tetracycline-resistant bacteria began to populate the chickens’ gut flora within a week. Despite this, rampant use of antibiotics like vancomycin (which had previously been considered a “last resort” drug) throughout the 1980s led to an epidemic of resistant strains on farms and among humans.

1990s. A stronger resistant strain of MRSA began sickening normal, healthy people in the 1990s. This perhaps created a greater public awareness of the danger of antimicrobial resistance.

By 2002, up to 60 percent of S. aureus cases in hospitals were resistant to methicillin. In 2005, over 100,000 Americans were stricken with MRSA infections and some 20,000 died, more than the number of people who were dying from HIV and tuberculosis combined, according to Harvard Magazine.

2012. As more researchers began working on the impending antibiotic-resistance epidemic, they had to tackle the classification of multidrug-resistant bacteria, which were multiplying by the minute. In a 2012 study, a team of scientists proposed adding the terms extensively drug-resistant (XDR) and pandrug-resistant (PDR) alongside multidrug-resistant (MDR) to help them better classify and potentially defeat these superbugs. It was the first time that researchers had a unified set of definitions for these resistant bacteria.

2013. After decades of certain researchers calling for action, the FDA finally implemented a plan to phase out certain antibiotic use in animals. But the extent to which this plan is effective at reducing the massive damage already done is difficult to identify.

2014. In response to major superbug outbreaks like Klebsiella pneumoniae (which causes pneumonia and bloodstream infections in the hospital) and gonorrhea strains all over the world, the World Health Organization (WHO) released a statement noting that “this serious threat is no longer a prediction for the future, it is happening right now in every region of the world and has the potential to affect anyone, of any age, in any country.”

2015. McDonald's announced that it would phase out all meat sources that contained antibiotics, marking the first step of a major fast food company to heed the public health warnings and take action.

Since the 1970s, very few new antimicrobial agents have been discovered, and the only way for researchers to combat resistant bacteria has been to modify already existing antibiotics. This has led to a standstill of sorts between current antibiotics and the rapidly adjusting “superbugs.”

Today, from farms to hospitals to everyday workplaces, animals and humans are likely teeming with different forms of resistant bacteria. In 2013, a Consumer Reports investigation showed that over half of ground turkey meat sold in the U.S. contained strains of drug-resistant bacteria. According to the CDC, some 2 million people in the U.S. are infected with drug-resistant bugs every year, and 23,000 of them die from these infections. Those numbers are likely to get worse in the coming decades, according to recent reports.

The danger of the situation lies mainly in its complexity, Rustam Aminov writes in a 2010 report on antibiotic resistance: “It is not a single grand challenge; it is rather a complex problem requiring concerted efforts of microbiologists, ecologists, health care specialists, educationalists, policy makers, legislative bodies, agricultural and pharmaceutical industry workers, and the public to deal with. In fact, this should be of everyone's concern, because, in the end, there is always a probability for any of us at some stage to get infected with a pathogen that is resistant to antibiotic treatment.”