Autism Glass Project: Researchers At Stanford Are Using Google Glass To Help Treat Autism In Children

It wouldn’t be too surprising if by now you’d forgotten all about Google Glass. It launched with relative fanfare a few years ago but never really made the impression Google was hoping for. The company quietly scaled back its marketing of the device to the point that many people thought it was dead, at least until Google revealed earlier this year that it was still alive and well. Not everyone forgot about it, however; one group of scientists at Stanford University is now using it to help treat autism in children.

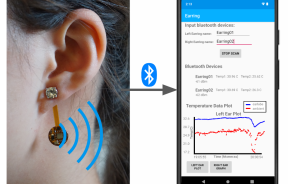

In what’s called the Autism Glass Project, researchers are combining face-tracking technology with machine learning to build at-home treatments for autism. In other words, Google Glass’ software learns to identify people’s faces and their emotional expressions (what project founder Catalin Voss calls “action units”) and then labels each expression with a specific word. These labels help the wearer recognize other people’s emotions. Autism, which affects one in 68 children, is characterized by difficulty recognizing emotions in facial expressions, among other symptoms. That difficulty makes it hard to form and sustain social interactions and friendships.

The first phase of the study took place last year in the Wall Lab at the Stanford School of Medicine and included 40 experiments. Researchers focused mainly on the interactions between children with autism and computer screens, because Stanford had only one Google Glass device available at the time. Google has since donated 35 more devices, and the Packard Foundation has contributed $379,408 in grants.

Now in its second phase, the Autism Glass Project includes 100 children and aims to determine how effective the system is as an at-home autism treatment. While the team is already finding it difficult to classify images of people’s faces by emotion, they say the bigger challenge is ensuring that children show a measurable improvement in emotion recognition when they are not wearing the device. To that end, the team used a game developed at MIT’s Media Lab called Capture the Smile.

Meant to be played while wearing Google Glass, Capture the Smile asks children to find nearby people showing specific emotional expressions. Using the game along with video analysis and parental questionnaires, the researchers were able to derive a “quantitative phenotype” for each child, which gives them more information about that child’s physical symptoms of autism. Over time, the researchers expect to be able to show how playing the game improves emotion recognition in the participants.

The second phase of the study asks children to wear Glass for three 20-minute sessions daily. The researchers can observe what the children are looking at during these sessions, which gives them a better understanding of the role eye contact plays in emotion detection. Voss also said that parents will be more involved in this phase of the study than in the first. “You can start to ask questions, of the percentage of time that the child is talking to his mom, how much is the child looking at her?” Voss told TechCrunch.
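To make the kind of question Voss describes concrete, here is a minimal sketch of how such a percentage could be computed. It is not the project's actual code: the `Frame` record, its two fields, and the `gaze_share_while_talking` function are hypothetical names invented for illustration, and the sketch assumes each video frame from a session has already been annotated with whether the child is talking to a parent and whether the child is looking at that parent.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """One hypothetical per-frame annotation from a recorded session."""
    talking_to_parent: bool   # child is in conversation with the parent
    looking_at_parent: bool   # child's gaze is on the parent's face


def gaze_share_while_talking(frames):
    """Return the fraction of conversation frames with gaze on the parent.

    Returns None when the child never talks to the parent, because the
    ratio is undefined in that case.
    """
    talking = [f for f in frames if f.talking_to_parent]
    if not talking:
        return None
    looking = sum(1 for f in talking if f.looking_at_parent)
    return looking / len(talking)


# Made-up example data: four conversation frames, two with eye contact.
session = [
    Frame(True, True),
    Frame(True, False),
    Frame(True, True),
    Frame(True, False),
    Frame(False, True),   # ignored: the child is not talking to the parent
]
print(gaze_share_while_talking(session))  # prints 0.5
```

In this made-up session the child looks at the parent during half of the frames in which they are talking, which is the sort of figure researchers could track from one session to the next.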

Exciting as the project is, Voss explained that this study is only the first of many steps. To make the therapy more widely available, researchers will need to collect clinical data showing whether their system works outside of the lab, and obtain a code from the American Medical Association. Only then will the technology and techniques improve and the number of children getting help grow.

If you want to read more or apply for the Autism Glass Project, check out its website.