Psychotherapy Does Work For Depression Patients, But Its Effectiveness May Be Overstated In Studies

It seems that psychology researchers may have a problem with turning in all their completed work to be graded, according to a new analysis published Wednesday in PLOS ONE.

The study authors found that a significant portion of publicly funded research intended to determine how effective psychotherapy is in the treatment of major depression has gone unpublished — almost 25 percent of it. Worse yet, when these missing studies are added back into the equation, it turns out that the benefits of therapy for depression, while still there, aren’t as strong as we’ve been led to believe.

"This doesn't mean that psychotherapy doesn't work. Psychotherapy does work. It just doesn't work as well as you would think from reading the scientific literature," said study author Steven Hollon, Gertrude Conaway Professor of Psychology at Vanderbilt University, in a statement.

Missing Work

Building on earlier research that found a similar overestimation effect for pharmacological treatments for depression, the authors scoured all studies funded by the National Institutes of Health (NIH) from 1972 to 2008 that explicitly measured how effective a psychotherapy was for major depression, so long as each was designed as a randomized, controlled trial recruiting adults aged 18 or older.

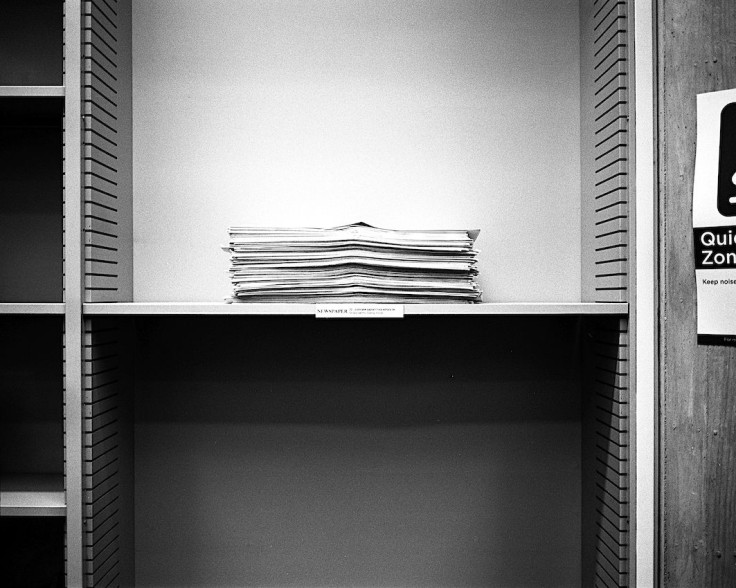

The publicly available database of NIH-funded research allowed them to track which of these studies had ultimately been published somewhere and which ones never saw the light of day. Of the 57 grants given by the NIH, 42 led to a published study, 13 led to a completed but unpublished study, and two never left the planning stages. They then conducted a meta-analysis twice, once without the missing studies and once with them included, finding that the unpublished but still perfectly valid studies pulled down the positive results of the rest.

When Hollon and his team went back and asked the original researchers why they chose not to publish, they often replied that they simply didn’t think their findings were interesting enough or that they had been distracted by other obligations. Only two of the studies were ever submitted for peer review, and both were ultimately rejected. The researchers behind three studies expressed a wish to someday publish their work.

Disappointing as those responses may be, they’re part of a larger systemic problem within many fields of science: a so-called “publication bias,” in which researchers, often without intending to, publish only flashy, positive studies and shelve less impressive findings. It’s a bias that can have some serious repercussions in the scientific world. "It's like flipping a bunch of coins and only keeping the ones that come up heads," Hollon said.
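Hollon's coin-flip analogy can be made concrete with a small simulation. The sketch below is only an illustration: the number of trials, the "true" effect, the noise level, and the publication threshold are all invented, and the helper names `simulate_trials` and `publish_filter` are hypothetical. It is not the method the study authors used. It simply shows that averaging only the more impressive results yields a higher figure than averaging everything.

```python
import random
import statistics


def simulate_trials(n_trials, true_effect, noise_sd, seed=0):
    """Draw one noisy effect estimate per hypothetical trial."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [rng.gauss(true_effect, noise_sd) for _ in range(n_trials)]


def publish_filter(estimates, threshold):
    """Keep only the estimates that look impressive enough to 'publish'."""
    return [e for e in estimates if e >= threshold]


# Every number here is made up for illustration, not taken from the study.
estimates = simulate_trials(n_trials=1000, true_effect=0.3, noise_sd=0.2)
published = publish_filter(estimates, threshold=0.2)

mean_all = statistics.mean(estimates)
mean_published = statistics.mean(published)

print(f"trials run:               {len(estimates)}")
print(f"trials 'published':       {len(published)}")
print(f"mean of all trials:       {mean_all:.2f}")
print(f"mean of published trials: {mean_published:.2f}")
```

In this toy setup the treatment still "works" on average, yet the published-only mean comes out higher than the mean of all trials, which mirrors the pattern the authors describe.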

The authors cite PLOS ONE itself, an open access journal that is “committed to publishing study results without regard to their strength and direction,” as an example of how the culture around publishing negative studies is slowly changing. Nonetheless, they believe more proactive steps are needed to ensure that fellow scientists and the public are given the clearest, least rose-tinted picture of scientific research.

“[W]e doubt that simple exhortations to authors or editors will do enough to change behavior. Instead, we join with others who recommend that funding agencies or journals should archive both original protocols and raw data from any funded randomized clinical trial,” they wrote.

In the meantime, “clinicians, guidelines developers, and decision makers should be aware of overestimated effects of the predominant treatments for major depressive disorder.”

Source: Driessen E, Hollon S, Bockting C, et al. Does Publication Bias Inflate the Apparent Efficacy of Psychological Treatment for Major Depressive Disorder? A Systematic Review and Meta-Analysis of US National Institutes of Health-Funded Trials. PLOS ONE. 2015.

Published by Medicaldaily.com